The expression EO PIS appears in multiple industries and usually points to structured, end‑of‑cycle information pipelines. In many organizations, it is read as End‑of‑Period Information System (finance, performance management) or End‑of‑Process/Operation Information System (manufacturing, IT operations). Whatever the expansion, the goal is consistent: collect, validate, consolidate, and publish trustworthy summaries when a process, shift, sprint, or accounting period closes. This guide explains what the concept covers, why it matters, how to implement it, and which metrics and controls you should expect from a mature deployment.

EO PIS definitions and background

At its core, EO PIS encapsulates the practice of delivering authoritative snapshots when a defined operational window ends. Historically, this started as spreadsheet-based closing packs. As data volumes and compliance expectations grew, companies formalized those packs into automated systems with version control, auditability, and strong governance. In modern data stacks, the term may describe a layer that triggers at the end of a period, materializes curated tables, calculates KPIs, and distributes them to finance, operations, or executives.

EO PIS in business reporting and finance

In finance, EO PIS commonly underpins monthly, quarterly, and annual closes. The platform aggregates ledger entries, reconciles sub-ledgers, performs variance analyses, and produces management and statutory reports. Typical outputs include profit and loss (P&L) summaries, cash flow statements, segment profitability reports, and working capital dashboards. By automating these tasks, the system shortens the close cycle, increases traceability, and reduces the risk of manual errors that can cascade into misstatements or regulatory penalties.
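As an illustration of the sub-ledger reconciliation step, here is a minimal sketch. The account codes, the `reconcile` function, and the 0.01 tolerance are assumptions made for the example, not part of any particular ERP or ledger product.

```python
from decimal import Decimal


def reconcile(gl_balances, subledger_balances, tolerance=Decimal("0.01")):
    """Return accounts whose GL and sub-ledger balances differ by more than tolerance."""
    exceptions = {}
    for account in sorted(set(gl_balances) | set(subledger_balances)):
        # An account missing on one side is treated as a zero balance there.
        gl = gl_balances.get(account, Decimal("0"))
        sub = subledger_balances.get(account, Decimal("0"))
        difference = gl - sub
        if abs(difference) > tolerance:
            exceptions[account] = difference
    return exceptions


# Hypothetical period-end balances.
gl = {"1200-AR": Decimal("15000.00"), "2000-AP": Decimal("-8200.00")}
sub = {"1200-AR": Decimal("15000.00"), "2000-AP": Decimal("-8150.00")}
exceptions = reconcile(gl, sub)  # only 2000-AP is out of tolerance
```

Using `Decimal` rather than floats avoids rounding noise that could otherwise show up as false reconciliation exceptions.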

EO PIS in technology and data engineering

For IT and data teams, EO PIS is the scheduled orchestration that kicks in when an operational run, a batch window, or a deployment cycle ends. It collects logs, error counts, latency histograms, resource utilization, and service-level compliance metrics. The collected evidence feeds post‑mortems, capacity planning, and continuous improvement loops. When architected well, this layer is event-driven, idempotent, observable, and fully versioned—so every “period end” can be replayed or audited.
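One way to read "idempotent" and "replayable" here is that rerunning a period-end job with the same inputs leaves exactly one, identical result. The sketch below assumes an in-memory dictionary as the store and a hypothetical `close_period` function; a real system would write to a database or object store instead.

```python
import hashlib
import json


def close_period(store, period_id, events):
    """Summarise one period's events and write the result under a deterministic key."""
    summary = {
        "period_id": period_id,
        "event_count": len(events),
        "error_count": sum(1 for event in events if event["level"] == "error"),
    }
    # Checksum over the canonical JSON form, so identical inputs give identical output.
    canonical = json.dumps(summary, sort_keys=True)
    summary["checksum"] = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    # Overwrite by key instead of appending: a replay replaces, never duplicates.
    store[period_id] = summary
    return summary


store = {}
events = [{"level": "info"}, {"level": "error"}, {"level": "info"}]
first = close_period(store, "2024-W07", events)
replay = close_period(store, "2024-W07", events)
```

After both calls the store still holds a single entry for `2024-W07`, and the checksum gives auditors a quick way to confirm that a replay reproduced the original result.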

EO PIS in manufacturing and logistics

In plants, warehouses, and supply chains, EO PIS closes the information loop at the end of a shift, work order, or distribution cycle. It compiles throughput, scrap, changeover times, OEE (Overall Equipment Effectiveness), downtime causes, and energy usage. Supervisors use the outputs to rebalance crews, schedule maintenance, and improve takt times. In highly regulated sectors (e.g., pharma or aerospace), the same framework ensures batch genealogy and traceability are preserved and reportable.
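OEE is conventionally computed as availability × performance × quality. The sketch below follows that standard definition; the function name and the shift figures are made up for illustration.

```python
def oee(planned_minutes, downtime_minutes, ideal_cycle_minutes, total_count, good_count):
    """Return (availability, performance, quality, oee) for one shift."""
    run_minutes = planned_minutes - downtime_minutes
    availability = run_minutes / planned_minutes
    # Performance compares the ideal time for the units produced with actual run time.
    performance = (ideal_cycle_minutes * total_count) / run_minutes
    quality = good_count / total_count
    return availability, performance, quality, availability * performance * quality


# Hypothetical shift: 400 planned minutes, 40 minutes down,
# 0.5 minutes ideal cycle time, 600 units made, 570 of them good.
availability, performance, quality, shift_oee = oee(400, 40, 0.5, 600, 570)
```

For these inputs availability is 0.9, quality is 0.95, and OEE works out to about 0.7125, which equals good units × ideal cycle time ÷ planned time (570 × 0.5 ÷ 400).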

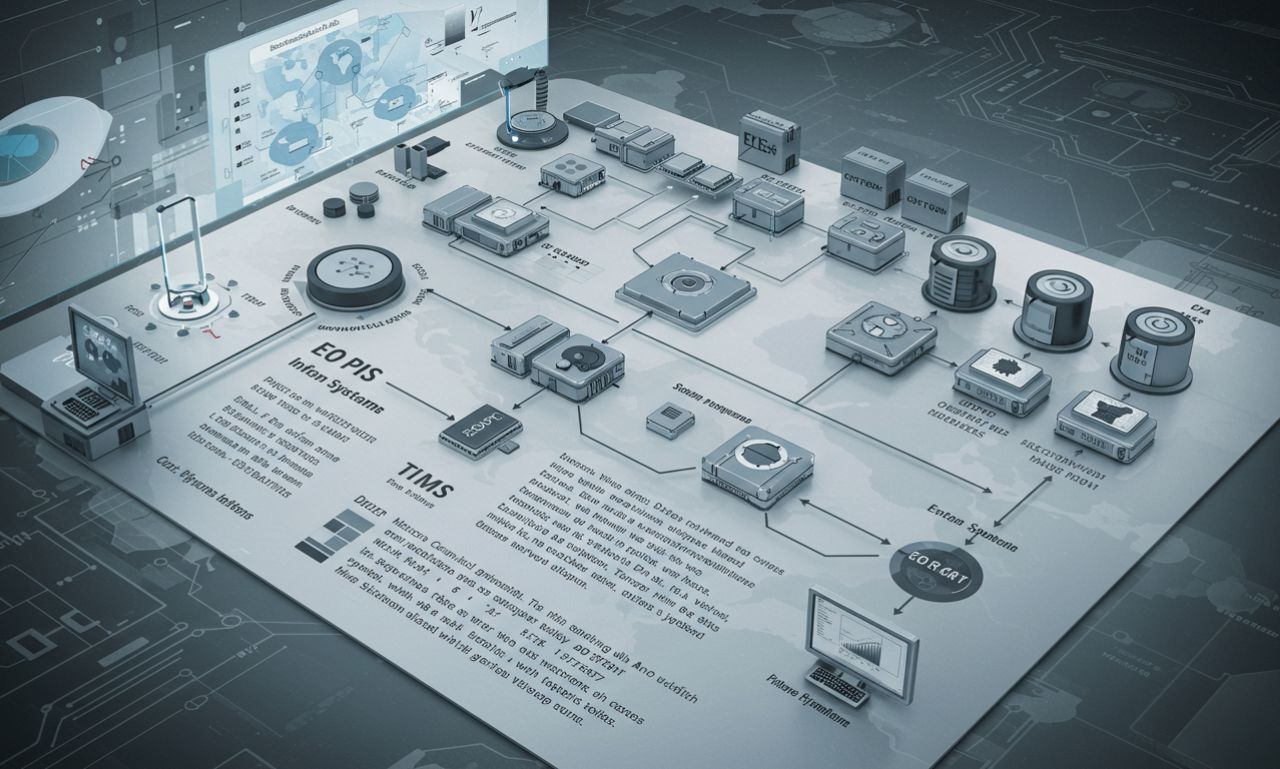

EO PIS architecture: the building blocks

A robust EO PIS typically includes several coordinated layers:

Data ingestion in EO PIS

Connectors and CDC (change data capture) streams extract data from ERPs, MES, CRMs, and telemetry platforms, landing it reliably in a raw zone with schema tracking.

Transformation & validation in EO PIS

Business rules, reconciliations, and data quality tests (null checks, referential integrity, threshold alerts) run in DAG-based orchestrators. Failed tests block final publication.
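The idea that failed tests block publication can be sketched as a simple gate. Everything named here (`PublicationBlocked`, `run_quality_tests`, `publish`, the `amount` and `cost_center` columns) is hypothetical; in practice the checks would usually run as tasks inside the orchestrator.

```python
class PublicationBlocked(Exception):
    """Raised when at least one data quality test fails."""


def run_quality_tests(rows, valid_cost_centers, max_abs_amount):
    """Run null, threshold, and referential-integrity checks; return failure messages."""
    failures = []
    for index, row in enumerate(rows):
        amount = row.get("amount")
        if amount is None:
            failures.append(f"row {index}: amount is null")
        elif abs(amount) > max_abs_amount:
            failures.append(f"row {index}: amount {amount} exceeds threshold")
        if row.get("cost_center") not in valid_cost_centers:
            failures.append(f"row {index}: unknown cost_center {row.get('cost_center')!r}")
    return failures


def publish(rows, valid_cost_centers, max_abs_amount):
    """Publish only if every quality test passes."""
    failures = run_quality_tests(rows, valid_cost_centers, max_abs_amount)
    if failures:
        raise PublicationBlocked(failures)
    return {"status": "published", "row_count": len(rows)}
```

Raising an exception rather than returning a flag makes it hard for a downstream step to ignore a failed check by accident.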

Storage & modeling in EO PIS

A curated layer (data warehouse or lakehouse) hosts star/snowflake models, slowly changing dimensions, and periodized fact tables ready for consumption.

Presentation & distribution in EO PIS

Dashboards, pixel-perfect financial statements, APIs, and scheduled emails serve executives, analysts, auditors, and operations teams with the finalized outputs.

Observability & audit in EO PIS

Lineage graphs, logs, and immutable audit trails prove who changed what, when, and why—crucial for SOX, ISO 27001, or industry-specific audits.
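One common way to make an audit trail tamper-evident is to chain entries by hash, so that editing any earlier entry invalidates everything after it. This is a sketch of that general technique under assumed field names (`actor`, `action`, `reason`, `timestamp`); it is not a requirement of SOX or ISO 27001, and real deployments typically rely on append-only storage as well.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(trail, actor, action, reason, timestamp):
    """Append an entry that records who did what, when, and why."""
    body = {
        "actor": actor,
        "action": action,
        "reason": reason,
        "timestamp": timestamp,
        "prev_hash": trail[-1]["hash"] if trail else GENESIS_HASH,
    }
    entry = {**body, "hash": _digest(body)}
    trail.append(entry)
    return entry


def verify(trail):
    """Return True only if every entry is intact and correctly linked."""
    prev_hash = GENESIS_HASH
    for entry in trail:
        body = {key: value for key, value in entry.items() if key != "hash"}
        if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True
```

If someone later edits the `reason` of an old entry, `verify` returns False because the stored hash no longer matches the entry's content.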

EO PIS implementation roadmap

Rolling out EO PIS successfully requires structured governance and phased delivery:

- Define the "period": month-end, sprint-end, or batch-end; agree on the operational boundary.
- Map critical data sources: finance ledgers, MES, SCM, HRIS, monitoring stacks.
- Codify rules: KPIs, reconciliation logic, tolerances, exception workflows.
- Choose the stack: ingestion tools, orchestrators (e.g., Airflow, Dagster), warehouse/lakehouse, BI, and observability.
- Automate testing: unit tests for transformations, data quality tests, contract tests on schemas.
- Pilot: start with one domain (e.g., monthly financial close) to prove value.
- Scale & harden: add domains, implement RBAC, lineage, backup/restore, and SLAs.
- Continuously improve: iterate on metrics, reduce manual touchpoints, and benchmark cycle-time reductions.
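The first step, agreeing on the period boundary, is easier to enforce once the boundary exists in code. A minimal sketch for calendar months follows; the `Period` class and `month_period` helper are illustrative names, and fiscal calendars such as 4-4-5 would need different logic.

```python
import calendar
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Period:
    label: str
    start: date
    end: date  # inclusive last day of the period

    def is_closable(self, today):
        """A period can only be closed once its last day has passed."""
        return today > self.end


def month_period(year, month):
    """Build the calendar-month period, handling month lengths and leap years."""
    last_day = calendar.monthrange(year, month)[1]
    return Period(f"{year}-{month:02d}", date(year, month, 1), date(year, month, last_day))


february = month_period(2024, 2)
```

Here `february.end` is 29 February 2024, and `february.is_closable(date(2024, 3, 1))` is True while the same check on 29 February is False.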

KPIs and metrics tracked by EO PIS

An EO PIS should surface metrics that evaluate both business performance and the health of the information process itself:

- Close cycle time (e.g., days to monthly close)
- Number of manual journal adjustments
- Data quality score across critical entities
- SLA adherence for data availability and report delivery
- Reconciliation exceptions opened/closed per period
- OEE, scrap rate, downtime minutes for manufacturing contexts
- Mean Time to Detect (MTTD) and Mean Time to Resolve (MTTR) for IT ops
- Regulatory filing timeliness and error rate
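Two of these metrics are simple date arithmetic. The sketch below assumes close cycle time is counted in calendar days from period end to sign-off, and that MTTR is measured from detection to resolution; teams define both differently, so treat these as one possible convention.

```python
from datetime import date, datetime, timedelta


def close_cycle_days(period_end, books_closed):
    """Calendar days between the end of the period and the close sign-off."""
    return (books_closed - period_end).days


def mean_time_to_resolve(incidents):
    """Average of (resolved - detected) over a list of (detected, resolved) pairs."""
    durations = [resolved - detected for detected, resolved in incidents]
    return sum(durations, timedelta()) / len(durations)


# Hypothetical figures for one month.
cycle = close_cycle_days(date(2024, 1, 31), date(2024, 2, 6))
incidents = [
    (datetime(2024, 1, 10, 10, 0), datetime(2024, 1, 10, 10, 30)),
    (datetime(2024, 1, 22, 12, 0), datetime(2024, 1, 22, 13, 30)),
]
mttr = mean_time_to_resolve(incidents)
```

With these inputs the close took 6 days and the two incidents (30 and 90 minutes) average out to a one-hour MTTR.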

Governance, compliance, and security in EO PIS programs

Because PIS outputs often feed auditors, regulators, and the C-suite, governance is non-negotiable. Core practices include:

- Role-based access control (RBAC) to restrict sensitive ledgers or HR data
- Data lineage and change logs to ensure repeatability and evidence trails
- Encryption at rest and in transit, secrets management, and key rotation
- Segregation of duties between data engineers, finance controllers, and report approvers
- Automated retention and purge policies aligned with GDPR/CCPA and sectoral rules
- Attestation workflows where owners sign off on finalized period reports
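RBAC, segregation of duties, and attestation can be combined in a single sign-off check. The role names, permission strings, and `sign_off` function below are assumptions for illustration; production systems would normally delegate this to an identity provider or the platform's own access controls.

```python
# Hypothetical mapping of roles to the actions they may perform.
ROLE_PERMISSIONS = {
    "data_engineer": {"run_pipeline"},
    "finance_controller": {"post_adjustment"},
    "report_approver": {"sign_off"},
}


def is_allowed(role, action):
    return action in ROLE_PERMISSIONS.get(role, set())


def sign_off(report, user, role):
    """Attest a finalized report, enforcing RBAC and segregation of duties."""
    if not is_allowed(role, "sign_off"):
        raise PermissionError(f"role {role!r} may not sign off reports")
    if user == report["prepared_by"]:
        raise PermissionError("preparer and approver must be different people")
    # Return a new dict so the unapproved report is left unchanged.
    return {**report, "approved_by": user}


report = {"period": "2024-01", "prepared_by": "alice"}
approved = sign_off(report, "bob", "report_approver")
```

The second check is what enforces segregation of duties: even a user holding the approver role cannot attest a report they prepared themselves.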

Common PIS pitfalls and how to avoid them

Even mature organizations stumble when implementing EO PIS:

- Ambiguous ownership: assign data product owners and stewards up front.
- Manual overrides without traceability: enforce controlled adjustments with audit logs.
- Schema drift and brittle pipelines: adopt contracts, automated tests, and schema registries.
- Over-centralization that slows teams: balance federated domain autonomy with shared standards (think Data Mesh with strong platform governance).
- Ignoring observability: without metrics, logs, and lineage, diagnosing period-end failures becomes a matter of guesswork.
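To make the schema-drift point concrete, here is a minimal contract check. The `LEDGER_CONTRACT` columns and the `check_contract` function are hypothetical; dedicated schema registries and validation libraries offer far richer checks than this sketch.

```python
# Hypothetical contract: expected column names and Python types.
LEDGER_CONTRACT = {"entry_id": int, "account": str, "amount": float}


def check_contract(row, contract):
    """Return a list of problems: missing, mistyped, or unexpected columns."""
    problems = []
    for column, expected_type in contract.items():
        if column not in row:
            problems.append(f"missing column: {column}")
        elif not isinstance(row[column], expected_type):
            actual = type(row[column]).__name__
            problems.append(f"{column}: expected {expected_type.__name__}, got {actual}")
    for column in row:
        if column not in contract:
            problems.append(f"unexpected column: {column}")
    return problems


ok_row = {"entry_id": 1, "account": "1200-AR", "amount": 99.5}
drifted_row = {"entry_id": "1", "account": "1200-AR", "amt": 99.5}
```

Running `check_contract(drifted_row, LEDGER_CONTRACT)` reports the mistyped `entry_id`, the missing `amount`, and the unexpected `amt`, which is the kind of upstream rename that otherwise surfaces only as a failed period-end run.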

Future trends shaping EO PIS

The next wave of PIS solutions will be smarter, faster, and more autonomous:

- Event-driven, streaming-first closes that shrink the gap between “operational now” and “period end”.
- AI-assisted reconciliations that flag anomalies, propose correcting entries, and learn from historical resolutions.
- Data contracts formalized as code, negotiated between producers and consumers to reduce breakages.
- Composable finance and ops stacks where PIS capabilities are delivered as reusable services.
- Continuous compliance with real-time control monitoring rather than after-the-fact checks.

EO PIS FAQ

What does EO PIS stand for?

Common expansions include End‑of‑Period Information System and End‑of‑Process/Operation Information System, depending on the domain.

Is PIS only for finance teams?

No. IT operations, manufacturing, logistics, and even product analytics teams use the same pattern to close information loops.

Do I need a data warehouse to run PIS?

Not strictly, but warehouses or lakehouses greatly simplify modeling, governance, and repeatability.

How often should PIS run?

As often as your operational cadence requires, such as hourly, daily, shift-end, month-end, or quarter-end.

What’s the quickest win?

Automate reconciliations and data quality checks for one critical period-end report, then scale.

Conclusion

In an environment where decisions are scrutinized, timelines are compressed, and regulators demand transparency, EO PIS provides the structured, auditable layer that turns raw operational noise into trusted, end‑of‑cycle insight. Implement it with clear ownership, robust data quality, and automation-first principles, and you’ll shorten closes, improve reliability, and empower teams to act with confidence.